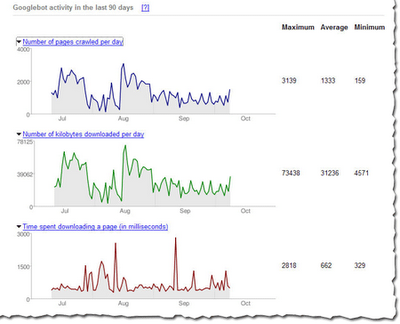

Googlebot activity reports

Check out these cool charts! We show you the number of pages Googlebot's crawled from your site per day, the number of kilobytes of data Googlebot's downloaded per day, and the average time it took Googlebot to download pages. Webmaster tools show each of these for the last 90 days. Stay tuned for more information about this data and how you can use it to pinpoint issues with your site.

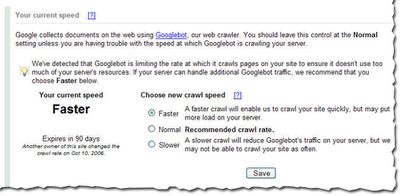

Crawl rate control

Googlebot uses sophisticated algorithms that determine how much to crawl each site. Our goal is to crawl as many pages from your site as we can on each visit without overwhelming your server's bandwidth.

We've been conducting a limited test of a new feature that lets you give us input on how we crawl your site. Today, we're making this tool available to everyone. You can access this tool from the Diagnostic tab. If you'd like Googlebot to slow down the crawl of your site, simply choose the Slower option.

If we feel your server could handle the additional bandwidth, and we can crawl your site more, we'll let you know and offer the option for a faster crawl. If you request a changed crawl rate, the change will last for 90 days. If you like the changed rate, you can simply return to webmaster tools and make the change again.

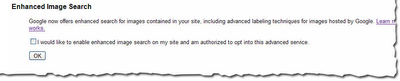

Enhanced image search

You can now opt into enhanced image search for the images on your site, which enables our tools such as Google Image Labeler to associate the images included in your site with labels that will improve indexing and search quality of those images. After you've opted in, you can opt out at any time.

Number of URLs submitted

Recently at SES San Jose, a webmaster asked me if we could show the number of URLs we find in a Sitemap. He said that he generates his Sitemaps automatically and he'd like confirmation that the number he thinks he generated is the same number we received. We thought this was a great idea. Simply access the Sitemaps tab to see the number of URLs we found in each Sitemap you've submitted.
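If you'd like a local number to compare against the count on the Sitemaps tab, here is a minimal sketch of one way to count the entries in a Sitemap you generated. It is only an illustration, not part of webmaster tools: the function name `count_sitemap_urls` and the sample Sitemap are hypothetical, and it assumes a plain XML Sitemap with one `<url>` element per page (not a Sitemap index file or a compressed file).

```python
import xml.etree.ElementTree as ET


def count_sitemap_urls(xml_text: str) -> int:
    """Count the <url> entries in an XML Sitemap (hypothetical helper)."""
    root = ET.fromstring(xml_text)
    # ElementTree reports tags as "{namespace}url"; compare only the local
    # name so the count does not depend on which namespace the file declares.
    return sum(1 for el in root.iter() if el.tag.rsplit("}", 1)[-1] == "url")


# Made-up sample Sitemap, used only for illustration.
sample = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>http://www.example.com/</loc></url>
  <url><loc>http://www.example.com/about</loc></url>
</urlset>"""

print(count_sitemap_urls(sample))
```

If the number printed for your own file differs from the number shown on the Sitemaps tab, that may point to a problem in how the Sitemap was generated or submitted.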

As always, we hope you find these updates useful and look forward to hearing what you think.
